Manual

tup

Section: tup manual (1)

Updated: 2024/05/19

NAME

tup - the updater

SYNOPSIS

tup [--debug-sql] [--debug-fuse] [SECONDARY_COMMAND] [ARGS]

DESCRIPTION

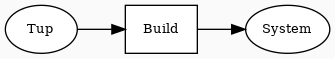

Tup is a file-based build system. You should use tup if you are developing software which needs to be translated from input files (written by you) into a different output format. For example, if you are writing a C program, you could use tup to determine which files need to be recompiled, archived, and linked based on a dependency graph.

Tup has no domain specific knowledge. You must tell tup how to build your program, such as by saying that all .c files are converted to .o files by using gcc. This is done by writing one or more Tupfiles.

PRIMARY COMMAND: tup

You can do all of your development with just 'tup', along with writing Tupfiles. See also the INI FILE, OPTIONS FILES and TUPFILES sections below.

- tup [<output_1> ... <output_n>]

Updates the set of outputs based on the dependency graph and the current state of the filesystem. If no outputs are specified then the whole project is updated. This is what you run every time you make changes to your software to bring it up-to-date. You can run this anywhere in the tup hierarchy, and it will always update the requested output. By default, the list of changed files is determined by scanning the filesystem and checking modification times. For very large projects this may be slow, but you can skip the scanning time by running the file monitor (see SECONDARY COMMANDS for a description of the monitor).

- -jN

- Temporarily override the updater.num_jobs option to 'N'. This will run up to N jobs in parallel, subject to the constraints of the DAG. Eg: 'tup -j2' will run up to two jobs in parallel, whereas 'tup' will run up to updater.num_jobs in parallel. See the options secondary command below.

- --verbose

- Causes tup to display the full command string instead of just the pretty-printed string for commands that use the ^ TEXT^ prefix.

- --quiet

- Temporarily override the display.quiet option to '1'. See the options secondary command below.

- -k

- --keep-going

- Temporarily override the updater.keep_going option to '1'. See the options secondary command below.

- --no-keep-going

- Temporarily override the updater.keep_going option to '0'. See the options secondary command below.

- --no-scan

- Do not scan the project for changed files. This is for internal tup testing only, and should not be used during normal development.

- --no-environ-check

- Do not check for updates to the environment variables exported to sub-processes. Instead, the environment variables will be used from the database. This is used by the monitor in autoupdate/autoparse mode so that the most recent environment variables are used, rather than the settings when the monitor was initialized.

- -d

- Output debug log to screen.

- --debug-run

- Output the :-rules generated by a run-script. See the 'run ./script args' feature in the TUPFILES section.

- --debug-logging

- Save some debug output and build graphs in .tup/log. Graphs are rotated on each invocation with --debug-logging.

SECONDARY COMMANDS

These commands are used to modify the behavior of tup or look at its internals. You probably won't need these very often. Secondary commands are invoked as:

tup [--debug-sql] [--debug-fuse] SECONDARY_COMMAND [SECONDARY_COMMAND_ARGS]

- init [directory]

Creates a '.tup' directory in the specified directory and initializes the tup database. If a directory name is unspecified, it defaults to creating '.tup' in the current directory. This defines the top of your project, as viewed by tup. Everything in that directory and its subdirectories is known as the "tup hierarchy".

If you want to avoid having to run 'tup init' every time you clone your repository to a new location, you can instead create an empty file called 'Tupfile.ini' in the project root. If 'tup' is run without the '.tup' directory present, it will automatically create one for you as a sibling of the Tupfile.ini file, as if you had run 'tup init' in that directory. If there are multiple Tupfile.ini files in parent paths, the one highest up the tree will be chosen.

- --no-sync

- Sets the 'db.sync' option of the project to '0'. By default the 'db.sync' option will be set to '1'. This flag is mostly used for test cases, but you could also use this in any environment where you want to script tup and use no synchronization by default.
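The Tupfile.ini lookup described for 'tup init' (search the parent paths and pick the Tupfile.ini highest up the tree) can be sketched in Python. This only illustrates the stated rule and is not tup's implementation; the helper name find_project_root is hypothetical.

```python
from pathlib import Path
from typing import Optional


def find_project_root(start: Path) -> Optional[Path]:
    """Return the highest ancestor of `start` (or `start` itself) that
    contains a Tupfile.ini, or None if there is none.

    Hypothetical helper illustrating the rule in the text; tup's real
    lookup may differ in detail.
    """
    start = start.resolve()
    root = None
    # Walk from `start` up to the filesystem root. The last match seen
    # is the one highest up the tree.
    for directory in [start, *start.parents]:
        if (directory / "Tupfile.ini").is_file():
            root = directory
    return root
```

With Tupfile.ini files in both proj/ and proj/a/, calling find_project_root on proj/a/b returns proj/, which is where the '.tup' directory would be created.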

- refactor

- ref

The refactor command can be used to help refactor Tupfiles. This will cause tup to run through the parsing phase, but not execute any commands. If any Tupfiles that are parsed result in changes to the database, these are reported as errors. For example, we may have the following simple Tupfile:

: foreach *.c |> gcc -c %f -o %o -Wall |> %B.o

After an initial 'tup', we decide that we want to move the -Wall to a variable called CFLAGS. The Tupfile now looks like:

CFLAGS = -Wall
: foreach *.c |> gcc -c %f -o %o $(CFLAGS) |> %B.o

Running 'tup refactor' will cause tup to parse the Tupfile, and if we made any mistakes, an error message will be displayed. You can then modify the Tupfiles to fix those errors, and re-run 'tup refactor' until all Tupfiles are parsed successfully.

Errors are reported for adding or removing any of the following: commands, inputs that are generated files, outputs, the .gitignore directive, input or output <groups>, and directory level dependencies. Otherwise, you are able to move any strings out to $-variables, !-macros, and the like, so long as the end-result of the set of :-rules is the same.

- monitor

*LINUX ONLY* Starts the inotify-based file monitor. The monitor must scan the filesystem once and initialize watches on each directory. Then when you make changes to the files, the monitor will see them and write them directly into the database. With the monitor running, 'tup' does not need to do the initial scan, and can start constructing the build graph immediately. The "Scanning filesystem..." time from 'tup' is approximately equal to the time you would save by running the monitor. When the monitor is running, a 'tup' with no file changes should only take a few milliseconds (on my machines I get about 2 or 3ms when everything is in the disk cache). If you restart your computer, you will need to restart the monitor.

The following arguments can be given on the command line. Any additional arguments not handled by the monitor will be passed along to the updater if either monitor.autoupdate or monitor.autoparse is enabled. For example, you could run the monitor as 'tup monitor -f -a -j2' to run the monitor in the foreground, and automatically update with 2 jobs when changes are detected. See also the options secondary command below.

- -d

- Enable debugging of the monitor process.

- -f

- --foreground

- Temporarily override the monitor.foreground option to 1. The monitor will run in the foreground (don't daemonize).

- -b

- --background

- Temporarily override the monitor.foreground option to 0. The monitor will run in the background (daemonize).

- -a

- --autoupdate

- Temporarily override the monitor.autoupdate option to 1. This will automatically run an update when file changes are detected.

- -n

- --no-autoupdate

- Temporarily override the monitor.autoupdate option to 0. This will prevent the monitor from automatically running an update when file changes are detected.

- --autoparse

- Temporarily override the monitor.autoparse option to 1. This will automatically run the parser when file changes are detected.

- --no-autoparse

- Temporarily override the monitor.autoparse option to 0. This will prevent the monitor from automatically running the parser when file changes are detected.

- stop

- Kills the monitor if it is running. Basically it saves you the trouble of looking up the PID and killing it that way.

- variant foo.config [bar.config] [...]

For each argument, this command creates a variant directory with tup.config symlinked (Windows: copied) to the specified config file. For example, if a directory contained several variant configurations, one could easily create a variant for each config file:

$ ls configs/
bar.config foo.config
$ tup variant configs/*.config
tup: Added variant 'build-bar' using config file 'configs/bar.config'
tup: Added variant 'build-foo' using config file 'configs/foo.config'

This is equivalent to the following:

$ mkdir build-bar
$ ln -s ../configs/bar.config build-bar/tup.config
$ mkdir build-foo
$ ln -s ../configs/foo.config build-foo/tup.config

For projects that commonly use several variants, the files in the configs/ directory could be checked in to source control. Each developer would run 'tup variant' after 'tup init' during the initial checkout of the software. Variants can also be created manually by making a build directory and creating a tup.config file in it (see the VARIANTS section). This command merely helps save some steps, so that you don't have to make each build directory and tup.config symlink manually.

- dbconfig

- Displays the current tup database configuration. These are internal values used by tup.

- options

Displays all of the current tup options, as well as where they originated.

For details on all of the available options and how to set them, see the OPTIONS FILES section below.

- graph [--dirs] [--ghosts] [--env] [--combine] [--stickies] [<output_1> ... <output_n>]

Prints out a graphviz .dot format graph of the tup database to stdout. By default it only displays the parts of the graph that have changes. If you provide additional arguments, they are assumed to be files that you want to graph. This operates directly on the tup database, so unless you are running the file monitor you may want to run 'tup scan' first. This is generally used for debugging tup -- you may or may not find it helpful for trying to look at the structure of your program.

- --dirs

- Temporarily override the graph.dirs option to '1'. This will show the directory nodes and Tupfiles.

- --ghosts

- Temporarily override the graph.ghosts option to '1'. This will show ghost nodes (files that a command tried to access, but don't actually exist).

- --env

- Temporarily override the graph.environment option to '1'. This will show the environment variables, such as PATH.

- todo [<output_1> ... <output_n>]

Prints out the next steps in the tup process that will execute when updating the given outputs. If no outputs are specified then it prints the steps needed to update the whole project. Similar to the 'upd' command, 'todo' will automatically scan the project for file changes if a file monitor is not running.

- --no-scan

- Do not scan the project for changed files. This is for internal tup testing only, and should not be used during normal development.

- --verbose

- Causes tup to display the full command string instead of just the pretty-printed string for commands that use the ^ TEXT^ prefix.

- generate [--config config-file] [--builddir directory] script.sh (or script.bat on Windows) [<output_1> ... <output_n>]

The generate command will parse all Tupfiles and create a shell script that can build the program without running in a tup environment. The expected usage is in continuous integration environments that aren't compatible with tup's dependency checking (eg: if FUSE is not supported). On Windows, if the script filename has a ".bat" extension, then the output will be a batch script instead of a shell script. For example:

git clone ... myproj
cd myproj
tup generate build.sh
./build.sh
# Copy out build artifacts / logs here
git clean -f -x -d

The shell script does not work incrementally, so it is effectively a one-time use. You will need to clean up the tree before the next 'tup generate' and script invocation. By default, the top-level tup.config file is used to define the configuration variables. Optionally, a separate configuration file can be passed in with the --config argument.

If a --builddir argument is specified, the shell script is generated as if it were a variant build. All build artifacts are placed in the directory specified. Otherwise, the default behavior is to place all build artifacts in-tree. The build directory must not already exist in the tree.

After the script name, individual output files can be listed in order to limit the scope of the shell script to only build those outputs. If no outputs are listed, the shell script will build all outputs.

- compiledb

The compiledb command creates a compile_commands.json file for use with third-party tools that use that file as a reference to determine how files are compiled. The file is created at the top of the tup hierarchy, or if you are using variants, each variant gets its own compile_commands.json created at the root of the variant. You must annotate files that you want to export with the ^j flag. For example, you could add the ^j flag to your compiler invocations:

: foreach *.c |> ^j^ gcc -c %f -o %o |> %B.o

Tup automatically parses any out-of-date Tupfiles (equivalent to 'tup parse') before generating the database.

Due to the expense of updating compile_commands.json with large projects, the file is *not* automatically updated when Tupfiles change. You must re-run 'tup compiledb' manually when you want an updated version.

To automatically pull the latest commandline directly from the database instead of using compile_commands.json as an intermediary, see the 'commandline' command.

See also: https://clang.llvm.org/docs/JSONCompilationDatabase.html
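As an illustration of how a third-party tool might consume the generated file, here is a minimal Python sketch that looks up the recorded command for one source file. The field names ("directory", "file", "command", "arguments") come from the Clang JSON Compilation Database format linked above; whether tup emits "command" or "arguments" is not stated here, so the sketch accepts either. The function name find_compile_command is hypothetical.

```python
import json
import shlex
from pathlib import Path


def find_compile_command(db_path, source):
    """Return the argv list recorded for `source`, or None if absent."""
    entries = json.loads(Path(db_path).read_text())
    wanted = Path(source)
    for entry in entries:
        # "file" may be given relative to the entry's "directory".
        recorded = Path(entry["directory"]) / entry["file"]
        if recorded == wanted or Path(entry["file"]) == wanted:
            if "arguments" in entry:
                return list(entry["arguments"])
            # Assumption: "command" uses POSIX shell quoting.
            return shlex.split(entry["command"])
    return None
```

A tool would typically call this with the path to compile_commands.json at the top of the tup hierarchy (or at the root of a variant) and the file currently being edited.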

- commandline <file_1> [<file_2> ... <file_n>]

The commandline command will print out the information needed to compile the filename given as an argument. The information is printed to stdout in the same JSON format as the compile_commands.json file from 'tup compiledb'. Note that as compared to the compiledb command, 'tup commandline' does not automatically parse Tupfiles before attempting to find the command. This simply queries the database directly.

For an example of how to use this, see the .ycm_extra_conf.py file located at the root of the tup git repository: https://github.com/gittup/tup/

- varsed

The varsed command is used as a subprogram in a Tupfile; you would not run it manually at the command-line. It is used to read one file, and replace any variable references and write the output to a second file. Variable references are of the form @VARIABLE@, and are replaced with the corresponding value of the @-variable. For example, if foo.txt contains:

The architecture is set to @ARCH@

And you have a :-rule in a Tupfile like so:

: foo.txt |> tup varsed %f %o |> foo-out.txt

Then on an update, the output file will be identical to the input file, except the string @ARCH@ will be replaced with whatever CONFIG_ARCH is set to in tup.config. The varsed command automatically adds the dependency from CONFIG_ARCH to the particular command node that used it (so if CONFIG_ARCH changes, the output file will be updated with the new value).

- scan

- You shouldn't ever need to run this, unless you want to make the database reflect the filesystem before running 'tup graph'. Scan is called automatically by 'upd' if the monitor isn't running.

- upd

- Legacy secondary command. Calling 'tup upd' is equivalent to simply calling 'tup'.
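The @VARIABLE@ substitution performed by 'tup varsed' (described earlier in this section) can be sketched in Python as follows. This is an illustration, not tup's code: the dictionary stands in for the CONFIG_ values from tup.config, the set of characters allowed in a variable name is an assumption, and leaving unknown variables untouched is a choice made for this sketch only.

```python
import re

# Assumption: variable names are made of letters, digits and underscores.
_VAR_REF = re.compile(r"@([A-Za-z0-9_]+)@")


def varsed(text, config):
    """Replace each @NAME@ in `text` with config["CONFIG_NAME"].

    Unknown variables are left untouched here; that choice is an
    assumption of this sketch, not documented tup behavior.
    """
    def substitute(match):
        key = "CONFIG_" + match.group(1)
        return config.get(key, match.group(0))

    return _VAR_REF.sub(substitute, text)


print(varsed("The architecture is set to @ARCH@", {"CONFIG_ARCH": "x86_64"}))
# prints: The architecture is set to x86_64
```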

INI FILE

The tup command can be run from anywhere in the source tree. It uses the information from all Tupfiles (see the TUPFILES section) as well as its own dynamic database, which is maintained in the .tup directory located at the root of the project. The .tup directory can be created manually with the 'tup init' command, or you can have it run automatically by adding an empty Tupfile.ini file at the root of the project's version control repository.

The contents of the Tupfile.ini file are ignored.

OPTIONS FILES

Tup allows for a variety of configuration files. These files affect the behavior of tup as a program, but not tup as a build system. That is to say, changing any of these options should not affect the end result of a successful build, but may affect how tup gets there (e.g. how many compile jobs to run in parallel).

The options are read in the following precedence order:

command-line overrides (eg: -j flag passed to 'tup')
.tup/options file
~/.tupoptions file
/etc/tup/options file
tup's compiled-in defaults

For Windows, the options files are read in as follows:

command-line overrides
.tup/options file
tup.ini in the Application Data path (usually C:\ProgramData\tup\tup.ini)
tup's compiled-in defaults

For an exact list of paths on your platform, type 'tup options'.

All files use the same .ini-style syntax. A section header is enclosed in square brackets, like so:

[updater]

The section header is followed by one or more variable definitions, of the form 'variable = value'. Comments start with a semi-colon and continue to the end of the line. The variable definitions can all be set to integers. For boolean flags, "true"/"yes" and "false"/"no" are synonyms for 1 and 0, respectively. For example, if you have a .tup/options file that contains:

[updater]

num_jobs = 2

keep_going = true

Then 'tup' will default to 2 jobs, and have the updater.keep_going flag set. The options listed below are of the form 'section.variable', so to set 'db.sync' you would need a '[db]' section followed by 'sync = 0', for example. The defaults listed here are the compiled-in defaults.

- db.sync (default '1')

- Set to '1' if the SQLite synchronous feature is enabled. When enabled, the database is properly synchronized to the disk in a way that it is always consistent. When disabled, it will run faster since writes are left in the disk cache for a time before being written out. However, if your computer crashes before everything is written out, the tup database may become corrupted. See http://www.sqlite.org/pragma.html for more information.

- updater.num_jobs (defaults to the number of processors on the system)

- Set to the maximum number of commands tup will run simultaneously. The default is dynamically determined to be the number of processors on the system. If updater.num_jobs is greater than 1, commands will be run in parallel only if they are independent. See also the -j option.

- updater.keep_going (default '0')

- Set to '1' to keep building as much as possible even if errors are encountered. Anything dependent on a failed command will not be executed, but other independent commands will be. The default is '0', which will cause tup to stop after the first failed command. See also the -k option.

- updater.full_deps (defaults to '0')

- Set to '1' to track dependencies on files outside of the tup hierarchy. The default is '0', which only tracks dependencies within the tup hierarchy. For example, if you want all C files to be re-compiled when gcc is updated on your system, you should set this to '1'. In Linux and OSX, using full dependencies requires that the tup binary is suid as root so that it can run sub-processes in a chroot environment. Alternatively on Linux, if your kernel supports user namespaces, then you don't need to make the binary suid. Note that if this value is set to '1' from '0', tup will rebuild the entire project. Disabling this option when it was previously enabled does not require a full rebuild, but does take some time since the nodes representing external files are cleared out. NOTE: This does not currently work with ccache or other programs that may write to external files due to issues with locking. This may be fixed in the future.

- updater.warnings (defaults to '1')

- Set to '0' to disable warnings about writing to hidden files. Tup doesn't track files that are hidden. If a sub-process writes to a hidden file, then by default tup will display a warning that this file was created. By disabling this option, those warnings are not displayed. Hidden filenames (or directories) include: ., .., .tup, .git, .hg, .bzr, .svn.

- display.color (default 'auto')

- Set to 'never' to disable ANSI escape codes for colored output, or 'always' to always use ANSI escape codes for colored output. The default is 'auto', which uses colored output if stdout is connected to a tty, and uses no colors otherwise (ie: if stdout is redirected to a file).

- display.width (defaults to the terminal width)

- Set to any number 10 or larger to force a fixed width for the progress bar. This is assumed to be the total width, some of which is used for spacing, brackets, and the percentage complete. If this value is less than 10, the progress bar is disabled.

- display.progress (defaults to '1' if stdout is a TTY)

- Set to '1' to enable the progress bar, or '0' to turn it off. By default it is enabled if stdout is a TTY, and disabled if stdout is not a TTY.

- display.job_numbers (default '1')

- Set to '0' to avoid displaying the "N) " string before the results of a job. The default is to display this number.

- display.job_time (default '1')

- Set to '0' to avoid displaying the runtime of a job along with the results. The default is to display the runtime. Note that the runtime displayed includes the time that tup takes to save the dependencies. Therefore, this runtime will likely be larger than the runtime when executing the same job manually in the shell.

- display.quiet (default '0')

- Set to '1' to prevent tup from displaying most output. Tup will still display a banner and output from any job that writes to stdout/stderr, or any job that returns a non-zero exit code. The progress bar is still displayed; see also display.progress for really quiet output.

- monitor.autoupdate (default '0')

- Set to '1' to automatically rebuild if a file change is detected. This only has an effect if the monitor is running. The default is '0', which means you have to type 'tup' when you are ready to update.

- monitor.autoparse (default '0')

- Set to '1' to automatically run the parser if a file change is detected. This is similar to monitor.autoupdate, except the update stops after the parser stage - no commands are run until you manually type 'tup'. This only has an effect if the monitor is running. Note that if both autoupdate and autoparse are set, then autoupdate takes precedence.

- monitor.foreground (default '0')

- Set to '1' to run the monitor in the foreground, so control will not return to the terminal until the monitor is stopped (either by ctrl-C in the controlling terminal, or running 'tup stop' in another terminal). The default is '0', which means the monitor will run in the background.

- graph.dirs (default '0')

- Set to '1' and the 'tup graph' command will show the directory nodes and their ownership links. Tupfiles are also displayed, since they point to directory nodes. By default directories and Tupfiles are not shown since they can clutter the graph in some cases, and are not always useful.

- graph.ghosts (default '0')

- Set to '1' to show ghost nodes. Some commands may try to read from many files that don't exist, causing ghost nodes to be created. By default, ghosts are not shown to make the graph easier to understand.

- graph.environment (default '0')

- Set to '1' to show the environment nodes (such as PATH) and their dependencies. By default the environment variables are not shown since nearly everything will depend on PATH.

- graph.combine (default '0')

- Set to '1' to try to combine similar nodes in the graph. For example, instead of showing 10 separate compilation commands that all have one .c file input and one .o file output, this will combine them into one command to more easily see the whole structure of the graph. By default all nodes are shown separately.
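The .ini syntax and precedence order described in this section can be approximated with Python's configparser. This is a rough sketch for illustration only, not how tup reads its options: the .ini strings stand in for the options files, the overrides dictionary stands in for command-line flags, only two of the compiled-in defaults listed above are included, and the name load_options is hypothetical.

```python
import configparser

# A few compiled-in defaults taken from the list above.
DEFAULTS = {"updater": {"keep_going": "0"}, "db": {"sync": "1"}}


def load_options(sources, overrides=None):
    """Merge option sources given from highest to lowest precedence.

    `sources` is a list of .ini-formatted strings (standing in for
    .tup/options, ~/.tupoptions and /etc/tup/options); `overrides`
    stands in for command-line flags such as -j.
    """
    parser = configparser.ConfigParser(
        comment_prefixes=(";",), inline_comment_prefixes=(";",)
    )
    parser.read_dict(DEFAULTS)
    # Read the lowest-precedence source first so later reads win.
    for text in reversed(sources):
        parser.read_string(text)
    if overrides:
        parser.read_dict(overrides)
    return parser


project = "[updater]\nnum_jobs = 2\nkeep_going = true\n"
user = "[updater]\nnum_jobs = 8 ; per-user setting\n[db]\nsync = 0\n"
opts = load_options([project, user], {"updater": {"num_jobs": "4"}})
print(opts.getint("updater", "num_jobs"))         # 4 (command-line override)
print(opts.getboolean("updater", "keep_going"))   # True (from the project file)
print(opts.getint("db", "sync"))                  # 0 (from the per-user file)
```

Without the overrides argument, num_jobs would come out as 2, because the project-level file takes precedence over the per-user file.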

TUPFILES

You must create a file called "Tupfile" in each directory of the tup hierarchy where you want to create output files based on input files. The input files can be anywhere else in the tup hierarchy, but the output file(s) must be written in the same directory as the Tupfile.

- : [foreach] [inputs] [ | order-only inputs] |> command |> [outputs] [ | extra outputs] [<group>] [{bin}]

The :-rules are the primary means of creating commands, and are denoted by the fact that the ':' character appears in the first column of the Tupfile. The syntax is supposed to look somewhat like a pipeline, in that the input files on the left go into the command in the middle, and the output files come out on the right.

- foreach

This is either the actual string "foreach", or it is empty. The distinction is in how many commands are generated when there are multiple input files. If "foreach" is specified, one command is created for each file in the inputs section. If it is not specified, one command is created containing all of the files in the inputs section. For example, the following Tupfiles are equivalent:

# Tupfile 1
: foo.c |> gcc -c foo.c -o foo.o |> foo.o
: bar.c |> gcc -c bar.c -o bar.o |> bar.o

# Tupfile 2
: foreach foo.c bar.c |> gcc -c %f -o %o |> %B.o
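To make the equivalence of the two Tupfiles concrete, the following Python sketch expands a simplified :-rule the way the example suggests. It is not tup's parser: it handles only %f, %o and %B with a single output, and in the non-foreach case it takes %B from the first input, which is an assumption. The function name expand_rule is hypothetical.

```python
import os


def expand_rule(inputs, command, output, foreach=False):
    """Expand a simplified :-rule into (command, output) pairs.

    Only %f (input names), %B (input basename without extension) and
    %o (output name) are handled, and only one output is supported.
    """
    if not inputs:
        return []
    # foreach: one command per input. Otherwise: one command for all inputs.
    groups = [[name] for name in inputs] if foreach else [list(inputs)]
    expanded = []
    for group in groups:
        # Assumption: without foreach, %B refers to the first input.
        base = os.path.splitext(os.path.basename(group[0]))[0]
        out = output.replace("%B", base)
        cmd = (command.replace("%f", " ".join(group))
                      .replace("%B", base)
                      .replace("%o", out))
        expanded.append((cmd, out))
    return expanded


for cmd, out in expand_rule(["foo.c", "bar.c"], "gcc -c %f -o %o", "%B.o", foreach=True):
    print(cmd, "->", out)
# gcc -c foo.c -o foo.o -> foo.o
# gcc -c bar.c -o bar.o -> bar.o
```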

Additionally, using "foreach" allows the use of the "%e" flag (see below).

- inputs

The input files for the command. An input file can be anywhere in the tup hierarchy, and is specified relative to the current directory. Input files affect the %-flags (see below). Wildcarding is supported within a directory by using the SQLite glob function. The special glob characters are '*', '?', and '[]'. For example, "*.c" would match any .c file, "fo?.c" would match any 3-character .c file that has 'f' and 'o' as the first two characters, and "fo[xyz].c" would match fox.c, foy.c, and foz.c. Globbing does not match directories, so "src/*.c" will work, but "*/*.c" will not.

Any inputs starting with '^' are Perl-compatible regular expressions used to exclude files that were matched in a previous glob. For example, you could compile all *.c files except main.c with the following fragment:

: foreach *.c ^main.c |> ... |>

These input exclusions are handled at parser time, and are distinct from the dependency exclusions in the outputs section.
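The glob characters described above can be tried out with Python's fnmatch module. Tup itself uses the SQLite glob function, so this is only a stand-in that agrees on the simple '*', '?' and '[]' cases shown in the text; other details may differ. The helper name glob_inputs and the file list are made up for the example.

```python
from fnmatch import fnmatchcase


def glob_inputs(pattern, filenames):
    """Return the names in one directory's `filenames` matching `pattern`."""
    return [name for name in filenames if fnmatchcase(name, pattern)]


files = ["foo.c", "fox.c", "foy.c", "food.c", "main.c", "foo.h"]
print(glob_inputs("*.c", files))        # ['foo.c', 'fox.c', 'foy.c', 'food.c', 'main.c']
print(glob_inputs("fo?.c", files))      # ['foo.c', 'fox.c', 'foy.c']
print(glob_inputs("fo[xyz].c", files))  # ['fox.c', 'foy.c']
```

The pattern is applied to the file names of a single directory, mirroring the statement that globbing does not match directories.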

- order-only inputs

These are also used as inputs for the command, but will not appear in any of the %-flags except %i. They are separated from regular inputs by use of the '|' character. In effect, these can be used to specify additional inputs to a command that shouldn't appear on the command line. Globbing is supported as in the inputs section. For example, one use for them is to specify auto-generated header dependencies:

: |> echo "#define FOO 3" > %o |> foo.h
: foreach foo.c bar.c | foo.h |> gcc -c %f -o %o |> %B.o

This will add the foo.h dependency to the gcc commands for foo.c and bar.c, so tup will know to generate the header before trying to compile. The foreach command will iterate over the regular inputs (here, foo.c and bar.c), not the order-only inputs (foo.h). If you forget to add such a dependency, tup will report an error when the command is executed. Note that the foo.h dependency is only listed here because it is created by another command -- normal headers do not need to be specified.

- command

- The command string that will be passed to the system(3) call by tup. This command is allowed to read from any file specified as an input or order-only input, as well as any other file in the tup hierarchy that is not the output of another command. In other words, a command cannot read from another command's output unless it is specified as an input. This restriction is what allows tup to be parallel safe. Additionally, the command must write to all of the output files specified by the "outputs" section, if any.

- When executed, the command's file accesses are monitored by tup to ensure that they conform to these rules. Any files opened for reading that were generated from another command but not specified as inputs are reported as errors. Similarly, any files opened for writing that are not specified as outputs are reported as errors. All files opened for reading are recorded as dependencies to the command. If any of these files change, tup will re-execute the command during the next update. Note that if an input listed in the Tupfile changes, it does not necessarily cause the command to re-execute, unless the command actually read from that input during the prior execution. Inputs listed in the Tupfile only enforce ordering among the commands, while file accesses during execution determine when commands are re-executed.
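The access rules above can be summarized as a small check over the files a command touched. The Python sketch below is purely illustrative: tup enforces these rules by monitoring the sub-process, and every name here (check_accesses, the argument names, the message strings) is hypothetical.

```python
def check_accesses(reads, writes, declared_inputs, declared_outputs, generated):
    """Return error strings for accesses that break the rules above.

    `declared_inputs` includes order-only inputs, and `generated` is the
    set of files produced by other commands. This is not tup's code.
    """
    errors = []
    for path in sorted(set(reads)):
        # Reading another command's output requires declaring it as an input.
        if path in generated and path not in declared_inputs:
            errors.append(f"undeclared read of generated file: {path}")
    for path in sorted(set(writes)):
        # Every file written must be listed as an output.
        if path not in declared_outputs:
            errors.append(f"undeclared write: {path}")
    for path in sorted(set(declared_outputs) - set(writes)):
        # Every listed output must actually be written.
        errors.append(f"declared output not written: {path}")
    return errors


# foo.h is generated by another rule but was not listed as an input.
print(check_accesses({"foo.c", "foo.h"}, {"foo.o"}, {"foo.c"}, {"foo.o"}, {"foo.h"}))
# ['undeclared read of generated file: foo.h']
```

Reads of ordinary, non-generated files (such as normal headers) never produce an error in this model; they would simply be recorded as dependencies.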

A command string can begin with the special sequence ^ TEXT^, which will tell tup to only print "TEXT" instead of the whole command string when the command is being executed. This saves the effort of using echo to pretty-print a long command. The short-display behavior can be overridden by passing the --verbose flag to tup, which will cause tup to display the actual command string instead of "TEXT". The space after the first '^' is significant. Any characters immediately after the first '^' are treated as flags. See the ^-flags section below for details. For example, this command will print "CC foo.c" when executing system(gcc -c foo.c -o foo.o):

: foo.c |> ^ CC %f^ gcc -c %f -o %o |> foo.o
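How the ^ TEXT^ prefix breaks down into flags, display text and the real command can be sketched in Python. This follows the description in the text (flag characters run from the first '^' to the first space or the closing caret) and is not tup's parser; returning the string unchanged when the closing caret is missing is an assumption, and the name split_display_prefix is hypothetical.

```python
def split_display_prefix(command):
    """Split a command string into (flags, display_text, real_command).

    display_text is None when the prefix carries only flags, and the
    command is returned unchanged when it has no ^...^ prefix.
    """
    if not command.startswith("^"):
        return "", None, command
    end = command.find("^", 1)
    if end == -1:
        # Assumption: without a closing caret there is no prefix at all.
        return "", None, command
    prefix = command[1:end]
    # Flags run up to the first space; the rest is the text to display.
    flags, sep, text = prefix.partition(" ")
    return flags, (text if sep else None), command[end + 1:].lstrip()


print(split_display_prefix("^ CC %f^ gcc -c %f -o %o"))
# ('', 'CC %f', 'gcc -c %f -o %o')
print(split_display_prefix("^j^ gcc -c %f -o %o"))
# ('j', None, 'gcc -c %f -o %o')
```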

- A command string can also begin with the special character '!', in which case the !-macro specified will be substituted in for the actual command. See the !-macro definition later. Commands can also be blank, which is useful to put all the input files in a {bin} for a later rule.

- outputs

The outputs section specifies the files that will be written to by the command. Only one command can write to a specific file, but a single command can output multiple files (such as how a bison command will output both a .c and .h file). The output can use any %-flags except %o. Once a file is specified in an output section, it is put into the tup database. Any following rules can use that file as an input, even if it doesn't exist in the filesystem yet.

Outputs starting with '^' are used to ignore dependencies on files. These exclusions are Perl-compatible regular expressions that are stored in the database. Any files read or written when the sub-process executes that match these patterns are ignored by tup. For example, if your compiler writes to a 'license_file.txt', you could instruct tup to ignore dependency errors on this file like so:

: foreach *.c |> ... |> %B.o ^license_file.txt

Note that this also instructs tup to ignore input dependencies on the file. If you wish to merely skip files in a glob, see the similar '^' syntax in the inputs section.

An exclusion cannot be used to match a file generated by the same rule (ie: one listed as an output in the command). Doing so will result in an "Unable to exclude a generated file" error.

You should only ignore dependencies with extreme caution. It is trivial to cause tup to fail to update when it should have because it's been instructed to ignore dependencies.

- extra-outputs

- The extra-outputs section is similar to the order-only inputs section. It is separated from the regular outputs by the '|' character. The extra-outputs behave exactly as regular outputs, except they do not appear in the %o flag. These can be used if a command generates files whose names do not actually appear in the command line. If there is exactly one output specified by the rule, the extra-outputs section can use the %O flag to represent the basename of the output. This can be useful in extra-outputs for !-macros.

- <group>

Output files can be grouped into global groups by specifying a <group> after the outputs but before a bin. Groups allow for order-only dependencies between folders. Note that groups are directory specific, however, so when referring to a group you must specify the path to where it is assigned. For example, if a main project depends on the output from several submodules you can structure Tup like so to make sure the submodules are built before the main project:

#./submodules/sm1/Tupfile
: foo.c |> gcc -c %f -o %o |> %B.o ../<submodgroup>

#./submodules/sm2/Tupfile
: bar.c |> gcc -c %f -o %o |> %B.o ../<submodgroup>

#./project/Tupfile
: baz.c | ../submodules/<submodgroup> |> gcc -c %f -o %o |> %B.o

Notice how groups are directory specific and the path is specified outside of the <>. By specifying the <submodgroup> as an order-only input, tup will build the submodules before attempting to build the entire project.

- {bin}

-

Outputs can be grouped into a bin using the "{bin}" syntax. A later rule can use "{bin}" as an input to use all of the files in that bin. For example, the foreach rule will put each .o file in the objs bin, which is used as an input in the linker rule:

: foreach *.c |> gcc -c %f -o %o |> %B.o {objs}
: {objs} |> gcc %f -o %o |> program

In this case one could use *.o as the input instead, but sometimes it is useful to separate outputs into groups even though they have the same extension (such as if one directory creates multiple binaries, using *.o wouldn't be correct). If a {bin} is specified in the output section of multiple rules, the bin will be the union of all the outputs. You can't remove things from a bin, and the bin disappears after the current Tupfile is parsed.

-

- ^-flags

-

In a command string that uses the ^ TEXT^ sequence, flag characters can be placed immediately after the ^ until the first space character or closing caret. For example:

: foo.c |> ^c CC %f^ gcc --coverage %f -o %o |> foo | foo.gcno
: bar.c |> ^c^ gcc --coverage %f -o %o |> bar | bar.gcno

In the foo.c case, the command requires namespaces (or suid) and will display "CC foo.c". In the bar.c case, the command requires namespaces (or suid) and the "gcc --coverage bar.c -o bar" string is displayed. These are the supported flag characters:

- b

- The 'b' flag causes the command to be run via "/usr/bin/env bash -e -o pipefail -c <command>" instead of the default "/bin/sh -e -c <command>". In addition to allowing bash extensions in the :-rule, "-o pipefail" dictates that "the return value of a pipeline is the value of the last (rightmost) command to exit with a non-zero status, or zero if all commands in the pipeline exit successfully."

- c

- The 'c' flag causes the command to fail if tup does not support user namespaces (on Linux) or is not suid root. In these cases, tup runs in a degraded mode where the fake working directories are visible in the sub-processes, and some dependencies may be missed. If these degraded behaviors will break a particular command in your build, add the 'c' flag so that users know they need to add the suid bit or upgrade their kernel. This flag is ignored on Windows.

- j

- The 'j' flag marks the command for export in the 'tup compiledb' command. If you are interested in using compile_commands.json, annotate the commands that you want to export with ^j and then run 'tup compiledb'. See the compiledb command for more details.

- o

- The 'o' flag causes the command to compare the new outputs against the outputs from the previous run. Any outputs that are the same will not cause dependent commands in the DAG to be executed. For example, adding this flag to a compilation command will skip the linking step if the object file is the same from the last time it ran. The 'o' flag is incompatible with the 't' flag.

- s

-

The 's' flag disables buffering of stdout/stderr for the subprocesses and enables "streaming mode". When streaming, stdout/stderr are inherited from the tup process, so messages would typically be displayed on the terminal while the subprocess is running in whatever order they are generated. Note that processes with 's' enabled may display messages interleaved with each other, as well as with tup's progress bar or other tup messages. This flag may be useful for long-running processes where you wish to see the output as it occurs, though it can make for confusing logs if it is used for many noisy commands that may run in parallel.

By default, tup will buffer a command's messages (for example, compiler warning messages) and display them under the banner for the command after it completes. Messages from separate commands are therefore always distinct and associated with the command that created them.

- t

-

The 't' flag causes the command's outputs to be transient. The outputs may be used as inputs to other commands, but after all dependent commands are executed, the transient outputs will be deleted from the filesystem. This can be used to save space if there are many stages of processing that each produce large outputs, but only the final output needs to be kept. The 't' flag is incompatible with the 'o' flag.

An example where the 't' flag can make sense in a build pipeline is if there are large assets that go through multiple stages of processing. For example, a large audio or video file that has stages of effects applied, each as a separate step in the Tupfile.

In contrast, the 't' flag does *not* make sense for object files in a C program, even though those could theoretically be deleted after the final executable is linked. If the object files were marked transient in this case, a change to any of the input C files would require *all* object files to be rebuilt in order to produce the executable, instead of only the single file that was changed.
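The flag-parsing rule stated at the start of this section (flag characters run from the opening ^ up to the first space or the closing caret) can be sketched in Python. This is only an illustrative model, not tup's parser; the function name split_caret_prefix is hypothetical, and it assumes the command string has already been isolated from the rest of the :-rule.

```python
def split_caret_prefix(command):
    """Split '^FLAGS TEXT^ rest' into (flags, display_text, rest).

    Illustrative model only; display_text is None when no TEXT was given,
    in which case the full command string would be displayed.
    """
    if not command.startswith("^"):
        return "", None, command
    end = command.index("^", 1)               # position of the closing caret
    prefix = command[1:end]
    rest = command[end + 1:].lstrip()
    flags, _, text = prefix.partition(" ")    # flags stop at the first space
    return flags, (text or None), rest


print(split_caret_prefix("^c CC %f^ gcc --coverage %f -o %o"))
# ('c', 'CC %f', 'gcc --coverage %f -o %o')
print(split_caret_prefix("^c^ gcc --coverage %f -o %o"))
# ('c', None, 'gcc --coverage %f -o %o')
```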

-

- %-flags

-

Within a command string or output string, the following %-flags may also be used to substitute values from the inputs or outputs. In general, %-flags expand to all filenames in a list. For example, %o expands to all output files. To refer to a single file, you can use %1o, %2o, etc to refer to the first or second (and so on) filename in the list.

-

- %%

- Expands to a single "%" character in the command string. This should be used when you want the percent character to be interpreted by the command itself rather than by tup's parser.

- %f

- The filenames from the "inputs" section. This includes the path and extension. This is most useful in a command, since it lists each input file name with the path relative to the current directory. For example, "src/foo.c" would be copied exactly as "src/foo.c". Individual files can be referenced with %1f, %2f, etc.

- %i

- The filenames from the "order-only inputs" section. This includes the path and extension. Usually order-only inputs are used for filenames that you don't want to appear in the command-line, but if you need to refer to them, you can use %i. Individual files can be referenced with %1i, %2i, etc.

- %b

- Like %f, but is just the basename of the file. The directory part is stripped off. For example, "src/foo.c" would become "foo.c". Individual files can be referenced with %1b, %2b, etc.

- %B

- Like %b, but strips the extension. This is most useful in converting an input file into an output file of the same name but with a different extension, since the output file needs to be in the same directory. For example, "src/foo.c" would become "foo". Individual files can be referenced with %1B, %2B, etc.

- %e

- The file extension of the current file when used in a foreach rule. This can be used for variables that can have different values based on the suffix of the file. For example, you could set certain flags for assembly (.S) files that are different from .c files, and then use a construct like $(CFLAGS_%e) to reference the CFLAGS_S or CFLAGS_c variable depending on what type of file is being compiled. For example, "src/foo.c" would become "c", while "src/foo.S" would become "S".

- %o

- The name of the output file(s). It is useful in a command so that the filename passed to a command will always match what tup thinks the output is. This only works in the "command" section, not in the "outputs" section. Individual files can be referenced with %1o, %2o, etc.

- %O

-

The name of the output file without the extension. This only works in the extra-outputs section if there is exactly one output file specified. A use-case for this is if you have a !-macro that generates files not specified on the command line, but are based off of the output that is named. For example, if a linker creates a map file by taking the specified output "foo.so", removing the ".so" and adding ".map", then you may want a !-macro like so:

!ldmap = |> ld ... -o %o |> | %O.map
: foo1.o foo2.o |> !ldmap |> foo.so

- %d

-

The name of the lowest level of the directory. For example, in foo/bar/Tupfile, this would be the string "bar". One case where this can be useful is in naming libraries based on the directory they are in, such as with the following !-macro:

!ar = |> ar crs %o %f |> lib%d.a

Using this macro in foo/bar/Tupfile would then create foo/bar/libbar.a

- %g

-

The string that a glob operator matched. For example with the files a_text.txt and b_text.txt, the rule:

: foreach *_text.txt |> foo %f |> %g_binary.bin

will output the filenames a_binary.bin and b_binary.bin. Only the first glob expanded will be substituted in for %g. %g is only valid when there is a single input file or foreach is used.

- %<group>

-

All of the files in "group". For example:

#./submodules/sm1/Tupfile
: foo.c |> gcc -c %f -o %o |> %B.o ../<submodgroup>

#./submodules/sm2/Tupfile
: bar.c |> gcc -c %f -o %o |> %B.o ../<submodgroup>

#./project/Tupfile
: ../submodules/<submodgroup> |> echo '%f' > %o |> submodules_f.txt
: ../submodules/<submodgroup> |> echo '%<submodgroup>' > %o |> submodules_group.txt

will produce "../submodules/<submodgroup>" in submodules_f.txt, but "../submodules/sm1/foo.o ../submodules/sm2/bar.o" in submodules_group.txt. If the input contains multiple groups with the same name but different directories, %<group> will be expanded to all of the files in each listed group.
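The per-file %-flags described above can be summarized with a small Python model. This is a sketch of the documented results for a single input file, not tup's implementation; the function names expand_flags and glob_match are hypothetical, glob_match handles only a pattern with a single '*', and file names with more than one dot are outside what the text above specifies.

```python
import os.path
import re


def expand_flags(input_path, tupfile_dir):
    """Model the values of %f, %b, %B, %e and %d for one input file."""
    base = os.path.basename(input_path)      # %b: directory part stripped
    stem, ext = os.path.splitext(base)       # %B: extension stripped as well
    return {
        "%f": input_path,                    # path and extension, unchanged
        "%b": base,
        "%B": stem,
        "%e": ext.lstrip("."),               # extension without the dot
        "%d": os.path.basename(os.path.normpath(tupfile_dir)),  # lowest directory level
    }


def glob_match(pattern, name):
    """Model %g: the text matched by '*' (single-'*' patterns only)."""
    prefix, _, suffix = pattern.partition("*")
    m = re.fullmatch(re.escape(prefix) + "(.*)" + re.escape(suffix), name)
    return m.group(1) if m else None


print(expand_flags("src/foo.c", "foo/bar"))
# {'%f': 'src/foo.c', '%b': 'foo.c', '%B': 'foo', '%e': 'c', '%d': 'bar'}
print(glob_match("*_text.txt", "a_text.txt") + "_binary.bin")
# a_binary.bin
```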

-

- var = value

- var := value

-

Set the $-variable "var" to the value on the right-hand side. Both forms are the same, and are allowed to more easily support converting old Makefiles. The $-variable "var" can later be referenced by using "$(var)". Variables referenced here are always expanded immediately. As such, setting a variable to have a %-flag does not make sense, because a %-flag is only valid in a :-rule. The syntax $(var_%e) is allowed in a :-rule. Variable references do not nest, so something like $(var1_$(var2)) does not make sense. You also cannot pass variable definitions in the command line or through the environment. Any reference to a variable that has not had its value set returns an empty string.

CFLAGS = -Dfoo
: bar.c |> cc $(CFLAGS) $(other) -o %o -c %f |> %B.o

will generate the command "cc -Dfoo -o bar.o -c bar.c" when run.

Any $-variable that begins with the string "CONFIG_" is automatically converted to the @-variable of the same name minus the "CONFIG_" prefix. In other words, $(CONFIG_FOO) and @(FOO) are interchangeable. Attempting to assign a value to a CONFIG_ variable in a Tupfile results in an error, since these can only be set in the tup.config file.

Note that you may see a syntax using back-ticks when setting variables, such as:

CFLAGS += `pkg-config fuse3 --cflags`

Tup does not do any special processing for back-ticks, so the pkg-config command is not actually executed when the variable is set in this example. Instead, this is passed verbatim to any place that uses it. Therefore if a command later references $(CFLAGS), it will contain the string `pkg-config fuse3 --cflags`, so it will be parsed by the shell.

- var += value

- Append "value" to the end of the current value of "var". If "var" has not been set, this is equivalent to a regular '=' statement. If "var" already has a value, a space is appended to the $-variable before the new value is appended.
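The assignment rules for $-variables ('=' and ':=' expand immediately, '+=' inserts a space before the new value, unset variables expand to an empty string) can be modeled in a few lines of Python. This is an illustrative sketch under those stated rules, not tup's parser; the helper names assign, append and expand are hypothetical.

```python
import re

variables = {}


def expand(text):
    """Replace each $(name) with its current value; unset names become ''."""
    return re.sub(r"\$\(([^()]*)\)", lambda m: variables.get(m.group(1), ""), text)


def assign(name, value):
    """Model 'var = value' and 'var := value' (both expand immediately)."""
    variables[name] = expand(value)


def append(name, value):
    """Model 'var += value'."""
    value = expand(value)
    if name in variables:
        variables[name] += " " + value   # a space separates old and new values
    else:
        variables[name] = value          # unset: same as a regular '='


append("WARNINGS", "-W")
append("WARNINGS", "-Wall")
assign("CFLAGS", "$(WARNINGS) -O2")
print(variables["CFLAGS"])       # -W -Wall -O2
print(repr(expand("$(other)")))  # ''
```

Because expansion happens at assignment time in this model, a later change to WARNINGS would not alter the value already stored in CFLAGS.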

- $(TUP_CWD)

- [lua] tup.getcwd()

- The special $-variable TUP_CWD is always set to the path relative to the Tupfile currently parsed. It can change value when including a file in a different directory. For example, if you "include ../foo.tup", then TUP_CWD will be set to ".." when parsing foo.tup. This lets foo.tup specify flags like "CFLAGS += -I$(TUP_CWD)", and CFLAGS will always have the -I directory where foo.tup is located, no matter if it was included as "../foo.tup" or "../../foo.tup" or "subdir/foo.tup". For an alternative to $(TUP_CWD) when referring to files, see the section on &-variables below.

- $(TUP_VARIANTDIR)

- [lua] tup.getvariantdir()

- Similar to $(TUP_CWD), $(TUP_VARIANTDIR) is set to be the path relative to the Tupfile currently parsed, but points to the corresponding directory in the variant. As an example where this might be useful, suppose your build generates common headers into a directory called "include/". A Tuprules.tup file at the level of that directory could add -I$(TUP_VARIANTDIR) to a compiler flags variable. If a variant is used, this will evaluate to a path like "../build/path/to/include", where "build" is the name of the variant directory. If variants aren't used, TUP_VARIANTDIR is equivalent to TUP_CWD.

- $(TUP_VARIANT_OUTPUTDIR)

- [lua] tup.getvariantoutputdir()

- $(TUP_VARIANT_OUTPUTDIR) always points to the output directory corresponding to the variant currently being parsed. Unlike $(TUP_VARIANTDIR), it does not change when including another file like Tuprules.tup or other partial *.tup files. Without variants, this always evaluates to the string "." to point to the current directory.

No other special $-variables exist yet, but to be on the safe side you should assume that all variables named TUP_* are reserved.

- &var = file

- &var := file

- &var += file

-

Set the &-variable to refer to the given file or directory. The file must be a normal file, not a generated file (an output from a :-rule). &-variables are used to refer to files in a similar way as $(TUP_CWD), except that instead of storing the relative path to the file, &-variables store tup's internal ID of the file. This means that the relative path to the file is determined when the &-variable is used, rather than when the variable is assigned as is the case with $(TUP_CWD). &-variables can only be used in the following locations: :-rule inputs, :-rule order-only inputs, :-rule commands, include lines, and run-script lines, and they can later be referenced by using "&(var)".

# Tuprules.tup
&libdir = src/lib
!cc = |> cc -I&(libdir) -c %f -o %o |> %B.o

# src/lib/Tupfile
: foreach *.c |> !cc |>
: *.o |> ar crs %o %f |> libstuff.a

# src/lib/test/Tupfile
: test_stuff.c |> !cc |>
: test_stuff.o &(libdir)/libstuff.a |> cc -o %o %f |> test_stuff

# src/Tupfile
: main.c |> !cc |> main.o
: main.o &(libdir)/libstuff.a |> cc -o %o %f |> main_app

will generate the following build.sh commands (via "tup generate build.sh"):

cd src/lib
cc -I. -c lib1.c -o lib1.o
cc -I. -c lib2.c -o lib2.o
ar crs libstuff.a lib1.o lib2.o
cd test
cc -I.. -c test_stuff.c -o test_stuff.o
cc -o test_stuff test_stuff.o ../libstuff.a
cd ../..
cc -Ilib -c main.c -o main.o
cc -o main_app main.o lib/libstuff.a
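The different -I paths in the generated commands follow from resolving &(libdir) relative to the directory where it is used. A rough Python analogy uses os.path.relpath; this only mimics the resulting paths and says nothing about how tup stores its internal file IDs. The function name include_flag is hypothetical.

```python
import os.path

LIBDIR = "src/lib"   # what &libdir refers to, relative to the top of the hierarchy


def include_flag(tupfile_dir):
    """Model '-I&(libdir)' as seen from the directory of the Tupfile using it."""
    return "-I" + os.path.relpath(LIBDIR, start=tupfile_dir)


for tupfile_dir in ("src/lib", "src/lib/test", "src"):
    print(tupfile_dir, "->", include_flag(tupfile_dir))
# src/lib -> -I.
# src/lib/test -> -I..
# src -> -Ilib
```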

- ifeq (lval,rval)

-

Evaluates the 'lval' and 'rval' parameters (ie: substitutes all $-variables and @-variables), and does a string comparison to see if they match. If so, all lines between the 'ifeq' and following 'endif' statement are processed; otherwise, they are ignored. Note that no whitespace is pruned for the values - all text between the '(' and ',' comprise 'lval', and all text between the ',' and ')' comprise 'rval'. This means that ifeq (foo, foo) is false, while ifeq (foo,foo) is true. This is for compatibility with Makefile if statements.

ifeq (@(FOO),y)
CFLAGS += -DFOO
else
CFLAGS += -g
endif
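The whitespace sensitivity of ifeq can be illustrated with a short Python model. It assumes the variables have already been substituted and that the text is split at the first comma; the function name ifeq is used here purely for illustration.

```python
def ifeq(args):
    """Compare lval and rval, where args is the text between '(' and ')'.

    No whitespace is trimmed, mirroring the rule described above.
    """
    lval, _, rval = args.partition(",")
    return lval == rval


print(ifeq("foo,foo"))    # True
print(ifeq("foo, foo"))   # False: rval is " foo", with a leading space
```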

- ifneq (lval,rval)

- Same as 'ifeq', but with the logic inverted.

- ifdef VARIABLE

-

Tests whether the @-variable named VARIABLE is defined at all in tup.config. If so, all lines between the 'ifdef' and following 'endif' statement are processed; otherwise, they are ignored. For example, suppose tup.config contains:

CONFIG_FOO=n

Then 'ifdef FOO' will evaluate to true. If tup.config doesn't exist, or does not set CONFIG_FOO in any way, then 'ifdef FOO' will be false.

- ifndef VARIABLE

- Same as 'ifdef', but with the logic inverted.

- else

- Toggles the true/false-ness of the previous if-statement.

- endif

- Ends the previous ifeq/ifdef/ifndef. Note that only 8 levels of nested if-statements are supported.

- error [message]

- Causes tup to stop parsing and fail, printing message to the user as explanation.

- !macro = [inputs] | [order-only inputs] |> command |> [outputs]

-

Set the !-macro to the given command string. This syntax is very similar to the :-rule, since a !-macro is basically a macro for those rules. The !-macro is not expanded until it is used in the command string of a :-rule. As such, the primary use of the !-macro is to have a place to store command strings with %-flags that may be re-used. For example, we could have a !cc macro in a top-level Tuprules.tup file like so:

!cc = |> ^ CC %f^ gcc -c %f -o %o |>

A Tupfile could then do as follows:

include_rules
: foreach *.c |> !cc |> %B.o

You will only want to specify the output parameter in either the !-macro or the :-rule that uses it, but not both. If you specify any inputs in the !-macro, they would usually be order-only inputs. For example, if you have a !cc rule where you are using a compiler that has been generated by tup, you can list the compiler file in the order-only list of the !-macro. The compiler file will then become an input dependency for any :-rule that uses the macro.

- include file

- Reads the specified file and continues parsing almost as if that file was pasted inline in the current Tupfile. Only regular files are allowed to be included -- attempting to include a generated file is an error. Any include statements that occur in the included file will be parsed relative to the included file's directory.

- include_rules

- Reads in Tuprules.tup files up the directory chain. The first Tuprules.tup file is read at the top of the tup hierarchy, followed by the next subdirectory, and so on through to the Tuprules.tup file in the current directory. In this way, the top-level Tuprules.tup file can specify general variable settings, and subsequent subdirectories can override them with more specific settings. You would generally specify include_rules as the first line in the Tupfile. The name is a bit of a misnomer, since you would typically use Tuprules.tup to define variables rather than :-rules.
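The order in which include_rules visits directories can be sketched in Python. This model only lists candidate paths from the top of the tup hierarchy down to the current directory; it assumes directories without a Tuprules.tup file are simply skipped, and the function name tuprules_candidates is hypothetical.

```python
from pathlib import PurePosixPath


def tuprules_candidates(current_dir):
    """Candidate Tuprules.tup paths, top of the hierarchy first.

    current_dir is given relative to the top of the tup hierarchy.
    """
    parts = PurePosixPath(current_dir).parts
    dirs = [PurePosixPath(*parts[:i]) for i in range(len(parts) + 1)]
    return [str(d / "Tuprules.tup") for d in dirs]


print(tuprules_candidates("src/lib"))
# ['Tuprules.tup', 'src/Tuprules.tup', 'src/lib/Tuprules.tup']
```

Since later files are read after earlier ones, a setting in src/lib/Tuprules.tup can override one made in the top-level Tuprules.tup.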

- run ./script args

-

Runs an external script with the given arguments to generate :-rules. This is an advanced feature that can be used when the standard Tupfile syntax is too simplistic for a complex program. The script is expected to write the :-rules to stdout. No other Tupfile commands are allowed - for example, the script cannot create $-variables or !-macros, but it can output :-rules that use those features. As a simple example, consider if a command must be executed 5 times, but there are no input files to use tup's foreach keyword. An external script called 'build.sh' could be written as follows:

#! /bin/sh -e
for i in `seq 1 5`; do
    echo ": |> echo $i > %o |> $i.txt"
done

A Tupfile can then be used to get these rules:

run ./build.sh

Tup will then treat this as if a Tupfile was written with 5 lines like so:

: |> echo 1 > %o |> 1.txt
: |> echo 2 > %o |> 2.txt
: |> echo 3 > %o |> 3.txt
: |> echo 4 > %o |> 4.txt
: |> echo 5 > %o |> 5.txt
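A run-script only has to write :-rules to stdout, so it does not need to be a shell script. As a sketch, a Python counterpart of build.sh might look like the following; the file name build.py and the function name rules are hypothetical, and the script is assumed to be executable so that a Tupfile could invoke it with a 'run ./build.py' line.

```python
#!/usr/bin/env python3
# Hypothetical build.py: a Python counterpart of the build.sh script above.


def rules(count):
    """Yield one :-rule per number, in Tupfile syntax."""
    for i in range(1, count + 1):
        yield f": |> echo {i} > %o |> {i}.txt"


if __name__ == "__main__":
    for rule in rules(5):
        print(rule)   # tup reads the generated :-rules from stdout
```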

Since the Tupfile-parsing stage is watched for dependencies, any files that this script accesses within the tup hierarchy will cause the Tupfile to be re-parsed. There are some limitations, however. First, the readdir() call is instrumented to return the list of files that would be accessible at the time the run-script starts executing. This means the files that you see in 'ls' on the command-line may be different from the files that your script sees when it is parsed. Tup essentially pretends that the generated files don't exist until it parses a :-rule that lists them as an output. Note that any :-rules executed by the run-script itself are not parsed until the script executes successfully. Second, due to some structural limitations in tup, the script cannot readdir() on any directory other than the directory of the Tupfile. In other words, a script can do 'for i in *.c', but not 'for i in sub/*.c'. The '--debug-run' flag can be passed to 'tup' in order to show the list of :-rules that tup receives from the script. Due to the readdir() instrumentation, this may be different than the script's output when it is run manually from the command-line.

- preload directory

-

By default, a run-script can only use a readdir() (ie: use a wild-card) on the current directory. To specify a list of other allowable wild-card directories, use the preload keyword. For example, if a run script needs to look at *.c and src/*.c, the src directory needs to be preloaded:

preload src
run ./build.sh *.c src/*.c

- export VARIABLE

-

The export directive adds the environment variable VARIABLE to the export list for future :-rules and run-scripts. The value for the variable comes from tup's environment, not from the Tupfile itself. Generally this means you will need to set the variable in your shell if you want to change the value used by commands and scripts. By default only PATH is exported. Windows additionally exports several variables suitable for building with the Visual Studio compiler suite. Tup will check the exported environment variables to see if they have changed values between updates, and re-execute any commands that use those environment variables. Note that this means if PATH is changed, all commands will run again. For example:

: |> command1 ... |>
export FOO
: |> command2 ... |>

Tup will save the current value of FOO and pass it to the environment when executing command2. If FOO has a different value during the next update, then command2 will execute again with the new value in the environment. In this example, command1 will not have FOO in its environment and will not re-execute when its value changes.

Note that the FOO above is passed to the environment; it is not provided as an internal variable within tup. Thus, given the following:

export FOO
: |> echo myFOO=$(FOO) envFOO=${FOO} > %o |> foo.txt

when run as "$ FOO=silly tup" would result in the contents of the foo.txt file being "myFOO= envFOO=silly". If the "export FOO" was removed from the Tupfile, the contents of the file would be "myFOO= envFOO=" because tup does not propagate environment variables unless they are explicitly exported. This helps preserve repeatable and deterministic builds.

If you wish to export a variable to a specific value rather than get the value from the environment, you can do that in your shell instead of through tup. For example, in Linux you can do:

: |> FOO=value command ... |>

This usage will not create a dependency on the environment variable FOO, since it is controlled through the Tupfile.

- import VARIABLE[=default]

-

The import directive sets a variable inside the Tupfile that has the value of the environment variable. If the environment variable is unset, the default value is used instead if provided. This introduces a dependency from the environment variable to the Tupfile, so that if the environment variable changes, the Tupfile is re-parsed. For example:

import CC=gcc
: foreach *.c |> $(CC) -c %f -o %o |> %B.o

This will compile all .c files with the compiler defined in the CC environment variable. If CC is not set in the environment, it will use gcc as the default. On a subsequent build, running 'CC=clang tup' will re-parse this Tupfile and re-build all .c files with clang instead (since the commandlines have changed).

Unlike 'export', the import command does not pass the variables to the sub-process's environment. In the previous example, the CC environment variable is therefore not set in the subprocess, unless 'export CC' was also in the Tupfile.
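The difference between export and import can be summarized with a small Python model: export controls which variables reach a command's environment, while import only produces a value for a $-variable in the Tupfile. This is a sketch of the behavior described above, not tup's code; the function names are hypothetical, the extra variables exported on Windows are ignored, and the text does not say what an import without a default yields when the variable is unset, so this model returns None in that case.

```python
def command_env(exported, tup_env):
    """Environment seen by a command: only exported names, valued from tup's environment."""
    names = ["PATH"] + list(exported)        # PATH is exported by default
    return {n: tup_env[n] for n in names if n in tup_env}


def import_var(name, tup_env, default=None):
    """Value of the $-variable created by 'import NAME[=default]'."""
    return tup_env.get(name, default)


tup_env = {"PATH": "/usr/bin", "FOO": "silly", "HOME": "/home/me"}
print(command_env(["FOO"], tup_env))     # {'PATH': '/usr/bin', 'FOO': 'silly'}
print(command_env([], tup_env))          # {'PATH': '/usr/bin'}
print(import_var("CC", tup_env, "gcc"))  # gcc
```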

- .gitignore

- Tells tup to automatically generate a .gitignore file in the current directory which contains a list of the output files that are generated by tup. This can be useful if you are using git, since the set of files generated by tup matches exactly the set of files that you want git to ignore. If you are using Tuprules.tup files, you may just want to specify .gitignore in the top-level Tuprules.tup, and then have every other Tupfile use include_rules to pick up the .gitignore definition. In this way you never have to maintain the .gitignore files manually. Note that you may wish to ignore other files not created by tup, such as temporary files created by your editor. In this case you will want to set up a global gitignore file using a command like 'git config --global core.excludesfile ~/.gitignore', and then set up ~/.gitignore with your personal list. For other cases, you can also simply add any custom ignore rules above the "##### TUP GITIGNORE #####" line.

- #

-

At the beginning of a line, a '#' character signifies a comment. A comment line is ignored by the parser. The comment can have leading whitespace, which is also ignored. If there is any non-whitespace before a '#' character, then the line is not a comment. It also means that if a previous line ended with '\' (line wrap) then '#' is interpreted as a regular symbol.

TUPFILE NOTES

Variable expansion in tup is immediate in every case except for !-macros. That is, if you see a :-rule or variable declaration, you can substitute the current values for the variables. The !-macros are only parsed when they are used in a :-rule. In that case, the actual :-rule is a sort of a union between the :-rule as written and the current value of the !-macro.

When tup parses a Tupfile, it makes a single pass through the file, parsing a line at a time. At the end of the Tupfile, all variable, !-macro, and {bin} definitions are discarded. The only lingering effects of parsing a Tupfile are the command nodes and dependencies that now exist in the tup database. Additionally, a .gitignore file may have been created if requested by the Tupfile.

@-VARIABLES

@-variables are special variables in tup. They are used as configuration variables, and can be read by Tupfiles or used by the varsed command. Commands are able to read them too, but the program executed by the command has to have direct knowledge of the variables. @-variables are specified in the tup.config file at the top of the tup hierarchy or in a variant directory. For example, tup.config may contain:

CONFIG_FOO=y

A Tupfile may then read the @-variable like so:

srcs-@(FOO) += foo.c
srcs-y += bar.c
: foreach $(srcs-y) |> gcc -c %f -o %o |> %B.o

In this example, if CONFIG_FOO is set to 'y', then the foo.c file will be included in the input list and therefore compiled. If CONFIG_FOO is unspecified or set to some other value, foo.c will not be included.

The @-variables can be used similarly to $-variables, with the following distinctions: 1) @-variables are read-only in Tupfiles, and 2) @-variables are in the DAG, which means reading from them creates a dependency from the @-variable to the Tupfile. Therefore any Tupfile that reads @(FOO) like the above example will be reparsed if the value of CONFIG_FOO in tup.config changes.

The reason for prefixing with "CONFIG_" in the tup.config file is to maintain compatibility with kconfig, which can be used to generate this file.

Note that the syntax for tup.config is fairly strict. For a statement like "CONFIG_FOO=y", tup will create an @-variable using the string starting after "CONFIG_", and up to the '=' sign. The value is everything immediately after the '=' sign until the newline, but if there is a surrounding pair of quotes, they are stripped. In this example, it would set "FOO" to "y". Note that if instead the line were "CONFIG_FOO = y", then the variable "FOO " would be set to " y".

In tup.config, comments are determined by a '#' character in the first column. These are ignored, unless the comment is of the form:

# CONFIG_FOO is not set

In this case, the @-variable "FOO" is explicitly set to "n".
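The strictness of the tup.config syntax can be illustrated with a minimal Python model of the rules above. It is a sketch, not tup's parser: the function name parse_tup_config is hypothetical, "a surrounding pair of quotes" is assumed to mean double quotes, and lines that fit none of the described forms are silently skipped here.

```python
import re


def parse_tup_config(text):
    """Return a dict of @-variable names to values, following the rules above."""
    config = {}
    for line in text.splitlines():
        unset = re.fullmatch(r"# CONFIG_(\w+) is not set", line)
        if unset:
            config[unset.group(1)] = "n"     # special comment form sets "n"
        elif line.startswith("#"):
            continue                         # ordinary comment
        elif line.startswith("CONFIG_") and "=" in line:
            # Name runs up to '=', value is everything after it; nothing is trimmed.
            name, _, value = line[len("CONFIG_"):].partition("=")
            if len(value) >= 2 and value[0] == value[-1] == '"':
                value = value[1:-1]          # strip one surrounding pair of quotes
            config[name] = value
    return config


print(parse_tup_config("CONFIG_FOO=y"))              # {'FOO': 'y'}
print(parse_tup_config("CONFIG_FOO = y"))            # {'FOO ': ' y'}
print(parse_tup_config("# CONFIG_BAR is not set"))   # {'BAR': 'n'}
```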

- @(TUP_PLATFORM)

- TUP_PLATFORM is a special @-variable. If CONFIG_TUP_PLATFORM is not set in the tup.config file, it has a default value according to the platform that tup itself was compiled in. Currently the default value is one of "linux", "solaris", "macosx", "win32", "freebsd" or "netbsd".

- @(TUP_ARCH)

-

TUP_ARCH is another special @-variable. If CONFIG_TUP_ARCH is not set in the tup.config file, it has a default value according to the processor architecture that tup itself was compiled in. Currently the default value is one of "i386", "x86_64", "powerpc", "powerpc64", "ia64", "alpha", "sparc", "riscv32", "riscv64", "arm64", "arm" or "s390x".

VARIANTS

Tup supports variants, which allow you to build your project multiple times with different configurations. Perhaps the most common case is to build a release and a debug configuration with different compiler flags, though any number of variants can be used to support whatever configurations you like. Each variant is built in its own directory distinct from each other and from the source tree. When building with variants, the in-tree build is disabled. To create a variant, make a new directory and create a "tup.config" file there. For example:

$ mkdir build-default
$ touch build-default/tup.config
$ tup

Here we created a directory called "build-default" and made an empty tup.config inside. Upon updating, tup will parse all of the Tupfiles using the configuration file we created, and place all build products within subdirectories of build-default that mirror the source tree. We could then create another variant like so:

$ mkdir build-debug
$ echo "CONFIG_MYPROJ_DEBUG=y" > build-debug/tup.config
$ tup

This time all Tupfiles will be parsed with @(MYPROJ_DEBUG) set to "y", and all build products will be placed in the build-debug directory. Note that setting @(MYPROJ_DEBUG) only has any effect if the variable is actually used in a Tupfile (perhaps by adding debug flags to the compiler command-line).

Running "tup" will update all variants. For example, updating after modifying a C file that is used in all configurations will cause it to be re-compiled for each variant. As with any command that is executed, this is done in parallel subject to the constraints of the DAG and the number of jobs specified. To build a single variant (or subset of variants), specify the build directory as the target to "tup", just like with any partial update. For example:

$ tup build-default

To delete a variant, just wipe out the build directory:

$ rm -rf build-debug

If you build with variants, it is recommended that you always have a default variant that contains an empty tup.config file. This helps check that your software is always able to be built by simply checking it out and doing 'tup init; tup' without relying on a specific configuration.

With in-tree builds, the resulting build outputs may rely on run-time files that are placed in the source tree and are not processed by tup. For variant builds, tup allows such files to be copied verbatim into the variant build directory by providing a built-in macro "!tup_preserve":

: foreach *.png |> !tup_preserve |>

Either a symbolic link or a copy of the source file will be created, depending on the OS and file system being used.

EXAMPLE

Parsing a :-rule may be a little confusing at first. You may find it easier to think of the Tupfile as a shell script with additional input/output annotations for the commands. As an example, consider this Tupfile:

WARNINGS += -W
WARNINGS += -Wall
CFLAGS = $(WARNINGS) -O2
CFLAGS_foo.c = -DFOO
: |> echo '#define BAR 3' > %o |> foo.h
: foreach *.c | foo.h |> gcc -c %f -o %o $(CFLAGS) $(CFLAGS_%f) |> %B.o
: *.o |> gcc %f -o %o |> program

Tup begins parsing this Tupfile with an empty $-variable set. The first "WARNINGS += -W" line will set the WARNINGS variable to "-W". The second line will append, so WARNINGS will be set to "-W -Wall". The third line references this value, so CFLAGS will now equal "-W -Wall -O2". The fourth line sets a new variable, called CFLAGS_foo.c, and sets it to "-DFOO". The first rule will create a new node "foo.h" in the database, along with the corresponding command to create it. Note this file won't exist in the filesystem until the command is actually executed after all Tupfiles are parsed.

The foreach :-rule will generate a command to compile each file. First tup will parse the input section, and use the glob operation on the database since a '*' is present. This glob matches foo.c and bar.c. Since it is a foreach rule, tup will run through the rule first using the input "foo.c", and again using the input "bar.c". The output pattern is parsed on each pass, followed by the command string.

On the foo.c pass, the output pattern "%B.o" is parsed, which will equal "foo.o". Now the command string is parsed, substituting "foo.c" for "%f" and "foo.o" for "%o". The $-variables are then expanded, so $(CFLAGS) becomes "-W -Wall -O2", and $(CFLAGS_foo.c) becomes "-DFOO". The final command string written to the database is "gcc -c foo.c -o foo.o -W -Wall -O2 -DFOO". An output link is written to the foo.o file, and input links are written from foo.c and foo.h (the order-only input).

On the second pass through the foreach rule, the only difference is "bar.c" is the input. Therefore the output pattern becomes "bar.o", and the final command string becomes "gcc -c bar.c -o bar.o -W -Wall -O2 " since $(CFLAGS_bar.c) was unspecified.
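The two passes described above can be mimicked with a short Python sketch. This is only an illustrative model of the substitution order in the walkthrough, not tup's parser: the helper names expand_vars and expand_foreach are hypothetical, the glob result is hard-coded to foo.c and bar.c, and only the %f, %o and %B flags are handled.

```python
import re

def expand_vars(text, variables):
    """Replace each $(NAME) with its value; unset variables expand to an empty string."""
    return re.sub(r"\$\(([^)]+)\)", lambda m: variables.get(m.group(1), ""), text)

def expand_foreach(inputs, command, output_pattern, variables):
    """Return one (command, output) pair per input, following the passes described above."""
    results = []
    for name in inputs:
        base = name.rsplit(".", 1)[0]  # %B: input name without its extension
        output = output_pattern.replace("%B", base)
        # %-flags are substituted first, so $(CFLAGS_%f) becomes $(CFLAGS_foo.c)
        cmd = command.replace("%f", name).replace("%o", output)
        # $-variables are expanded afterwards
        results.append((expand_vars(cmd, variables), output))
    return results

# Variable assignments from the example Tupfile ("+=" appends with a space)
variables = {}
variables["WARNINGS"] = "-W"
variables["WARNINGS"] += " -Wall"
variables["CFLAGS"] = expand_vars("$(WARNINGS) -O2", variables)
variables["CFLAGS_foo.c"] = "-DFOO"

rules = expand_foreach(
    ["foo.c", "bar.c"],  # assumed result of the *.c glob
    "gcc -c %f -o %o $(CFLAGS) $(CFLAGS_%f)",
    "%B.o",
    variables,
)
for cmd, out in rules:
    print(repr(cmd), "->", out)
```

Printing with repr() makes the trailing space in the bar.c command visible, which is what remains when $(CFLAGS_bar.c) expands to nothing.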

For more examples with corresponding DAGs, see http://gittup.org/tup/examples.html

OTHER BUILD SYSTEMS

Tup is a little bit different from other build systems. It uses a well-defined graph structure that is maintained in a separate database. A set of algorithms to operate on this graph were developed in order to handle cases such as modifying an existing file, creating or deleting files, changing command lines, etc. These algorithms are very efficient - in particular, for the case where a project is already built and one or more existing files are modified, tup is optimal among file-based build systems. For other cases, tup is at least very fast, but optimality has not been proved.

The primary reason for the graph database is to allow the tup update algorithm to easily access the information it needs. As a very useful side-effect of the well-defined database structure, tup can determine when a generated file is no longer needed. What this means is there is no clean target. Nor is there a need to do a "fresh checkout" and build your software from scratch. Any number of iterations of updates always produces the same output as it would if everything was built anew. Should you find otherwise, you've likely found a bug in tup (not your Tupfiles), in which case you should notify the mailing list (see CONTACT).
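To see why a recorded set of outputs makes a clean target unnecessary, consider the toy model below. It is not tup's actual algorithm or database schema; the function stale_outputs and the two example sets are hypothetical. It only illustrates the idea that comparing the outputs recorded by the previous update with the outputs the current rules produce identifies generated files that are no longer needed.

```python
def stale_outputs(previous, current):
    """Outputs recorded by the last update that no current rule produces."""
    return sorted(set(previous) - set(current))

# Hypothetical recorded outputs before and after bar.c is deleted from the project
before = {"foo.h", "foo.o", "bar.o", "program"}
after = {"foo.h", "foo.o", "program"}

to_remove = stale_outputs(before, after)
print(to_remove)
```

A build system without such a record has no way to tell bar.o apart from a file the user created by hand, which is why a manual clean step is commonly needed there.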

For more information on the theory behind tup, see http://gittup.org/tup/build_system_rules_and_algorithms.pdf

SEE ALSO

http://gittup.org/tup

CONTACT

tup-users@googlegroups.com

This document was created by man2html, using the manual pages.

Time: 04:30:30 GMT, May 20, 2024