Clobber Builds Part 3 - Other Clobber Causes

Part 3 in the clobber build series. Today we'll examine some of the reasons that even a build system with perfect dependencies would still need clobbering.

Clobber Builds - Recap

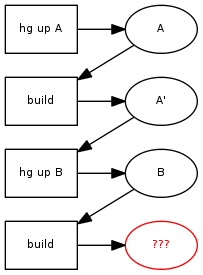

Part 1 of the series examined how missing dependencies in the Makefile result in clobbers. In Part 2, we showed how to modify the build system to eliminate the possibility of missing dependencies entirely. Now that one cause of clobbers has been solved, we'll broaden the scope a bit to see other ways in which the build system can do the wrong thing. Once again, we're looking for cases where we don't end up at B' in the diagram above.

Changing Command-lines

(Note: Developers using SCons or Ninja can skip to the next section and feel smugly superior to those lowly make users, since your build system actually handles this case!)

It can be a little surprising the first time you use make to find out that it won't re-compile something when you add or remove flags from the command-line. For example, suppose we build with version A of the Makefile, and then build with version B where we add the -DENABLE_FOO flag:

Makefile Version A

foo.o: foo.c

gcc -c $< -o $@

clean:

rm foo.o

Makefile Version B

foo.o: foo.c

gcc -DENABLE_FOO -c $< -o $@

clean:

rm foo.o

Here's what the output looks like:

$ cat foo.c

#ifdef ENABLE_FOO

void foo(void)

{

}

#endif

void bar(void)

{

}

$ make

gcc -c foo.c -o foo.o

$ nm foo.o

00000000 T bar

# Update Makefile to version B

$ make

make: `foo.o' is up to date.

$ nm foo.o

00000000 T bar

# Wrong!

$ make clean && make

rm foo.o

gcc -DENABLE_FOO -c foo.c -o foo.o

$ nm foo.o

00000005 T bar

00000000 T foo

# Clobber build produces the correct result

By changing the command-line to add the appropriate -D flag and running a build, we expect that the foo() function is present in foo.o, but it's not. Then when we manually clean things up and re-run the build, we find the output has changed. This is not how a build system should work.
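To see why make misses this, it helps to model the decision it makes. The following is a minimal Python sketch, not make's actual implementation: the `Rule` class, the `needs_rebuild` function, and the `mtimes` table are made up for illustration, and it assumes every prerequisite exists. The point is that the command string never enters the staleness check.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Rule:
    target: str
    prereqs: List[str]
    command: str  # stored, but never consulted by the check below


def needs_rebuild(rule: Rule, mtimes: Dict[str, float]) -> bool:
    """Timestamp-only check: rebuild if the target is missing or older than a prerequisite."""
    target_time = mtimes.get(rule.target)
    if target_time is None:
        return True
    # Assumes every prerequisite has an entry in mtimes.
    return any(mtimes[p] > target_time for p in rule.prereqs)


# Hypothetical timestamps: foo.o was built after foo.c was last edited.
mtimes = {"foo.c": 100.0, "foo.o": 200.0}

version_a = Rule("foo.o", ["foo.c"], "gcc -c foo.c -o foo.o")
version_b = Rule("foo.o", ["foo.c"], "gcc -DENABLE_FOO -c foo.c -o foo.o")

stale_a = needs_rebuild(version_a, mtimes)
stale_b = needs_rebuild(version_b, mtimes)
```

Both `stale_a` and `stale_b` come out False: the two rules differ only in `command`, and nothing in the check looks at it. That is the "is up to date" message in the transcript.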

How Mozilla Handles Changing Command-Lines

In the mozilla-central codebase, we use the "hammer" approach, wherein we add dependencies for the Makefile to every rule:

# Dependencies which, if modified, should cause everything to rebuild

GLOBAL_DEPS += Makefile $(DEPTH)/config/autoconf.mk $(topsrcdir)/config/config.mk

I call this the "hammer" approach because it essentially recompiles everything when any command-line may or may not have changed. For example, if I were to change the linking commands in config.mk (perhaps by modifying the arguments passed to expandlibs_exec.py), make would take the extra unnecessary step of recompiling every file in the tree. Although it would get the correct result in this case, since the libraries would be linked with my new flags, it would waste a lot of time getting there. All we really expect it to do is to re-link the things that need re-linking with the new flags, and nothing else.
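Here is a rough Python sketch of that effect, under simplifying assumptions: the rule table, the file names (`libfoo.a`, `bar.c`), and the timestamps are hypothetical, and only direct prerequisites are checked. Appending the global dependencies to every rule means a single edit to config.mk marks every target stale, whether or not its command actually changed.

```python
from typing import Dict, List

# Simplified stand-in for the GLOBAL_DEPS list; the real paths are longer.
GLOBAL_DEPS = ["Makefile", "config.mk"]

# Hypothetical rules: target -> its own prerequisites.
rules: Dict[str, List[str]] = {
    "foo.o": ["foo.c"],
    "bar.o": ["bar.c"],
    "libfoo.a": ["foo.o", "bar.o"],
}


def stale_targets(rules, mtimes, global_deps):
    """Return targets older than any of their prerequisites or any global dependency."""
    return sorted(
        target
        for target, prereqs in rules.items()
        if any(mtimes[p] > mtimes[target] for p in prereqs + global_deps)
    )


# Everything was built at time 10; afterwards only config.mk was edited.
mtimes = {
    "foo.c": 1, "bar.c": 1, "Makefile": 1,
    "foo.o": 10, "bar.o": 10, "libfoo.a": 10,
    "config.mk": 20,
}

with_hammer = stale_targets(rules, mtimes, GLOBAL_DEPS)
without_hammer = stale_targets(rules, mtimes, [])
```

With the global dependencies, all three targets are reported stale. Without them, none are, which is the plain make behavior from the previous section. Neither answer is the one we want, which is to re-link `libfoo.a` and leave the objects alone.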

Additionally, there are a number of included makefiles that are strangely absent from GLOBAL_DEPS. For example, if I wanted to change py_action in config/makefiles/functions.mk, I would find that no py_actions are re-executed with my changes. (Except of course for those py_actions that always run all the time for every build, even when nothing has changed! But that's another story.)

How the Linux Kernel Handles Changing Command-Lines

The Linux kernel tries to take a more targeted approach to handling changing command-lines. Essentially, it stores the previous command-line inside the dependencies file, and then uses a rule that always triggers on a build to compare the old command-line with the current one and decide whether to recompile. Here's the previous Makefile that builds foo.c, hacked up with Linux's command-line change detection (parts from linux/scripts/Kbuild.include and parts from linux/scripts/Makefile.build):

PHONY = FORCE

any-prereq = $(filter-out $(PHONY),$?) $(filter-out $(PHONY) $(wildcard $^),$^)

arg-check = $(strip $(filter-out $(cmd_$(1)), $(cmd_$@)) \

$(filter-out $(cmd_$@), $(cmd_$(1))) )

if_changed_rule = $(if $(strip $(any-prereq) $(arg-check) ), \

@set -e; \

$(rule_$(1)))

squote := '

escsq = $(subst $(squote),'\$(squote)',$1)

make-cmd = $(subst \\,\\\\,$(subst \#,\\\#,$(subst $$,$$$$,$(call escsq,$(cmd_$(1))))))

CFLAGS += -DFOO

CFLAGS += -UFOO

cmd_cc_o_c = gcc $(CFLAGS) -MMD -c $< -o $@

define rule_cc_o_c

echo 'CC $<'; $(cmd_cc_o_c) && ./fixdep $(@:.o=.d) $@ '$(call make-cmd,cc_o_c)' > $@.tmp; mv -f $@.tmp $(@:.o=.d)

endef

all: foo.o

%.o: %.c FORCE

$(call if_changed_rule,cc_o_c)

.PHONY: $(PHONY)

-include foo.d

This works quite a bit better - if the command-line for one .c file is changed because of a target-specific flag, only that .c file is recompiled. All the other objects in the tree can be reused from the last build. However, this approach has a few downsides:

- The use of $(filter-out) in arg-check means that the order of the arguments doesn't matter to the comparison, even though it matters to the compiler. gcc processes -D and -U flags in the order given, so 'gcc -c foo.c -o foo.o -DDEBUG -UDEBUG' leaves DEBUG undefined while 'gcc -c foo.c -o foo.o -UDEBUG -DDEBUG' defines it. If we changed the command-line from the first form to the second, this implementation would not pick up the new command-line, and so foo.c would not be recompiled with debugging enabled. This particular case probably doesn't come up too often (and could probably be fixed by fiddling with the macros), but I consider any surprise behavior that will require hours of developer time to track down to be a severe deficiency in the build system.

- Look again at the ridiculous Makefile construct above. Do you honestly think it is worthwhile for every project that uses make to implement something like this just to get a sane behavior for a common case like changing a command-line? Or should this instead be implemented as part of the core build system for all projects to use?
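To make the first downside concrete, here is a small Python model of the arg-check comparison. It is a sketch, not the kernel's code: `filter_out` only approximates GNU make's $(filter-out) for words that contain no `%` patterns, and `exact_check` is a hypothetical alternative that compares the word lists in order.

```python
from typing import List


def filter_out(patterns: str, text: str) -> List[str]:
    """Approximate $(filter-out patterns,text): keep words of text not listed in patterns."""
    drop = set(patterns.split())
    return [word for word in text.split() if word not in drop]


def arg_check(old_cmd: str, new_cmd: str) -> List[str]:
    """Words present in only one of the two commands; an empty list means 'unchanged'."""
    return filter_out(new_cmd, old_cmd) + filter_out(old_cmd, new_cmd)


def exact_check(old_cmd: str, new_cmd: str) -> bool:
    """Order-sensitive comparison: True if the commands differ at all."""
    return old_cmd.split() != new_cmd.split()


old = "gcc -c foo.c -o foo.o -DDEBUG -UDEBUG"
new = "gcc -c foo.c -o foo.o -UDEBUG -DDEBUG"

reordered_diff = arg_check(old, new)        # same words, different order
reordered_exact = exact_check(old, new)

added_flag_diff = arg_check(
    "gcc -c foo.c -o foo.o",
    "gcc -DENABLE_FOO -c foo.c -o foo.o",
)
```

An added flag shows up in `added_flag_diff`, so the common case works. The reordered command yields an empty `reordered_diff` even though `reordered_exact` is True, which is exactly the missed rebuild described in the first bullet.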

Removing Rules

(Note: Developers using SCons or Ninja need to pay attention again. Your build system fails here, just like make.)

In the previous section we looked at what happens when we change the command line for an existing rule. What happens if we add or remove a rule? Here are our Makefiles, which we'll keep explicit (and unnecessarily redundant) for now so it's obvious what's happening:

Makefile Version C

all: foo.o

foo.o: foo.c

gcc -c $< -o $@

clean:

rm foo.o

Makefile Version D

all: foo.o bar.o

foo.o: foo.c

gcc -c $< -o $@

bar.o: bar.c

gcc -c $< -o $@

clean:

rm foo.o bar.o

Now we'll start with version C and then incrementally build version D:

$ make

gcc -c foo.c -o foo.o

$ ls *.o

foo.o

# Call the current state of things "Checkpoint C"

# Now update the Makefile to version D

$ make

gcc -c bar.c -o bar.o

$ ls *.o

bar.o foo.o

So far so good! After building with the first Makefile, we built foo.o, as expected. Then we added a new target for bar.o, and when we built, we ended up with both objects. Hooray!

Now we'll do something totally unprecedented and unexpected in the world of software development. We are going to undo our changes in the Makefile and go back to version C. (Gasps and murmurs arise from the crowd...) And then we're going to incrementally build. (Tension builds...) And we expect to be in the exact same place we were at Checkpoint C. (Oh the humanity!) Can it be done?! Not if you're using make (or SCons, or Ninja, or ...).

# (Continued from before)

# Undo the Makefile changes, so we are back to version C

$ make

make: Nothing to be done for `all'.

$ ls *.o

bar.o foo.o

# Wrong! We should only have foo.o here, as in Checkpoint C

$ make clean

rm foo.o

$ make

gcc -c foo.c -o foo.o

$ ls *.o

bar.o foo.o

# Still wrong! Even a clean build didn't fix it.

This simple example demonstrates a few problems with make. First, make has no knowledge of what it did in previous builds. Every time you run make, it looks at the file-system as it currently exists, and reads the Makefiles as they currently exist. When it reads version C of the Makefile again in the above example, it has no idea that bar.o exists from a previous run, and that it needs to be removed.

Second, this shows the problem with implementing a clean target. In each version of the Makefile, the clean target perfectly cleans up all of the outputs of that version. However, when we have version C of the Makefile, make doesn't know how to clean the outputs left over from version D. That's why even doing a clobber build failed to get the correct output! You can see why many projects and substandard build systems enforce building in a completely separate directory. Implementing a correct build system is hard, but doing 'rm -rf objdir' is not.
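As a sketch of what remembering the previous build would buy us, suppose the build system recorded the outputs it produced last time. This is a hypothetical illustration in Python, not a feature of make and not necessarily the approach the rest of this series takes; `stale_outputs` and the two output lists are invented names.

```python
from typing import Iterable, List


def stale_outputs(previous: Iterable[str], current: Iterable[str]) -> List[str]:
    """Files the last build produced that no current rule produces any more."""
    return sorted(set(previous) - set(current))


# Outputs named by each Makefile version in the example above.
version_c_outputs = ["foo.o"]
version_d_outputs = ["foo.o", "bar.o"]

# Going from C to D adds a rule, so nothing is left over.
after_adding_rule = stale_outputs(version_c_outputs, version_d_outputs)

# Going back from D to C removes the bar.o rule, so bar.o is now stale.
after_removing_rule = stale_outputs(version_d_outputs, version_c_outputs)
```

With that record, going back to version C would flag bar.o for removal, and neither the incremental build nor the clean target would leave it behind.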

Stay tuned for the next part of the series, where we'll look at this problem in more detail, as well as how to solve it in the core build system so that all projects can have correct incremental builds.
