Moving Automation Steps in Tree

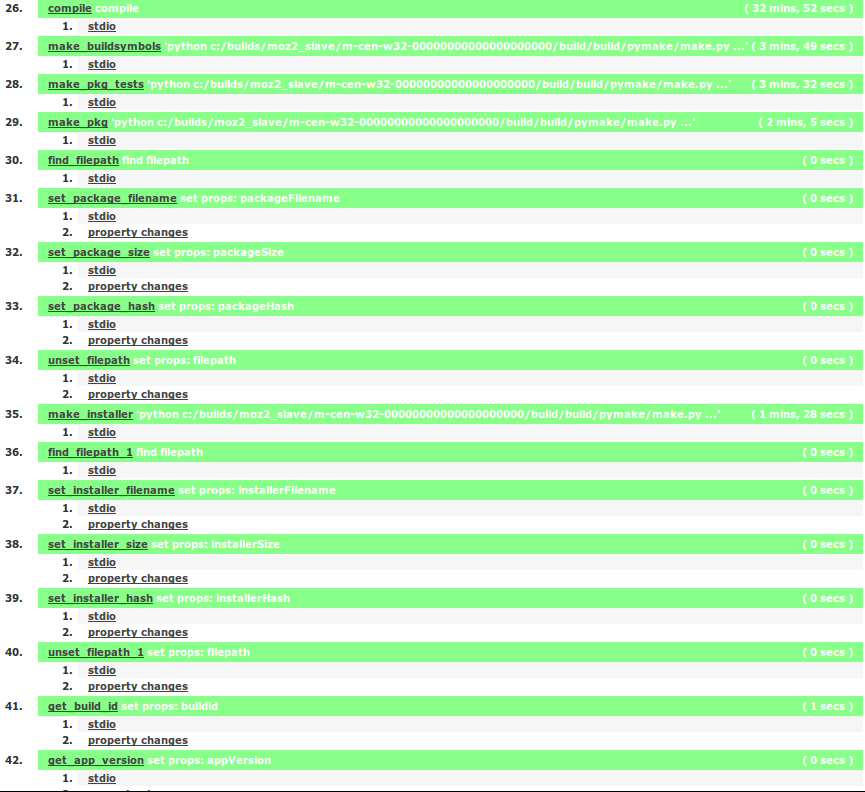

In bug 978211, we're looking to move the logic for the automation build steps from buildbot into mozilla-central. Essentially, we're going to convert this:

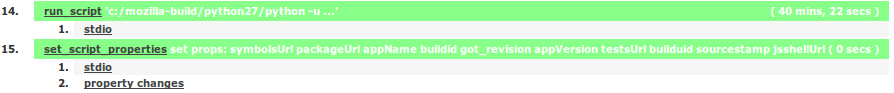

Into this:

What were once many steps in buildbot are now consolidated inside a single "mach build" call from mozharness (the "run_script" step in the picture). The actual steps are still largely the same internally, but this gives us greater control and flexibility in the future since we don't have to involve both the tree and buildbot. Another benefit is that since all steps are managed by the build system, we can parallelize independent steps and in theory provide a faster turnaround time. For example, in a Windows mozilla-central build, after the initial "compile" phase, buildbot would call each of these steps individually:

- make buildsymbols (3m49s)

- make package-tests (3m32s)

- make package (2m5s)

- make installer (1m28s)

- make upload (24s)

- make l10n-check (1m43s)

- make check (7m38s)

- Total time: 20m39s

(Note, this list is specific to the Windows build type mentioned. Each platform and build type will have its own set of steps that are different from this. Some platforms/build types will also build update packaging, upload symbols, or re-do some of these steps with the "pretty" package names, etc.)

In reality, the dependency graph among the steps looks like this:

As you can see, we effectively have three tiers of automation steps for this particular build type. Looking at the graph, you can see that most things depend on buildsymbols, except for package-tests and installer. This means we can run all three of these steps in parallel, and we would effectively get installer and package-tests for free (since we need to wait for buildsymbols to finish before starting on the rest). The long path in this DAG is the buildsymbols -> check chain, which provides a minimal exeuction time of 11m27s given maximum parallel efficiency.

The Good News

With a parallel build, the end-to-end time clocks in at 13m9s, so we save about 7m30s as compared to running everything sequetially. In addition, the real execution time is only ~15% above the theoretical minimum, so there's not much more gain to be had through parallelization. So, at first glance, things appear to be quite good with the new setup!

The Bad (and confusing) News

The numbers discussed so far are all for clobber builds. So when we do package-tests for example, there is no previous dist/test-stage directory that needs to be removed. When re-running all of these steps for an incremental build, there is paradoxically more work involved. None of these automation steps re-use previous results where possible (as far as I can tell), so in the incremental case, we need to clear out the old cruft and then redo much of the same work. For the buildbot setup, I ran a few incremental builds, which were fairly consistent and averaged a 20m31s runtime. Strangely, this is even slightly faster than in the clobber case. However, we aren't so lucky when running everything through mach. The new non-clobber case averaged in at 22m35s. This is not only slower than the sequential case, but almost twice as long as the clobbered parallel case! What is going on here?

I tried to isolate a few things to see what might be the cause. From looking at the build logs, I could see that the package-tests step was taking 11 minutes, compared to only 5 in the sequential test. One theory I had is that the initial 'rm -rf dist/test-stage' in package-tests was conflicting with some other heavy disk action from another build step, causing excessive slowdowns from swapping or the like. So, the first thing I tried is to force the automation steps to use -j1 when running the automation target. This way everything should run sequentially in make, just as if we were running it from buildbot. Unfortunately, this made things even worse, with a total turnaround time of 32m35s, and package-tests was still taking over 11 minutes!

One other thing I suspected was the output buffering that we're using from make-4.0. Since the automation steps spew a lot of output, reading through a log can be very confusing when multiple steps are run in parallel. Fortunately, make-4.0 allows you to specify a flag that causes the output to be buffered, so the whole log of say, the buildsymbols step, gets dumped to the screen only after it has completely finished. Is it possible that the buffering was somehow causing an extra delay? It turns out that there's no impact - this build was still over 32 minutes.

At this point, I'm pretty confused. As far as I can tell, the only difference between the two setups now is that one does 'make foo; make bar', and the other does 'make foo bar', yet the runtime varies so much. Maybe there is some other interaction with the Windows build machine? Or maybe it's more random that it appears and I'm just getting unlucky? I can't reproduce the behavior on my Windows VM, where running in parallel is always faster. If you have any ideas for things to check, please post a comment and let me know!

comments powered by Disqus